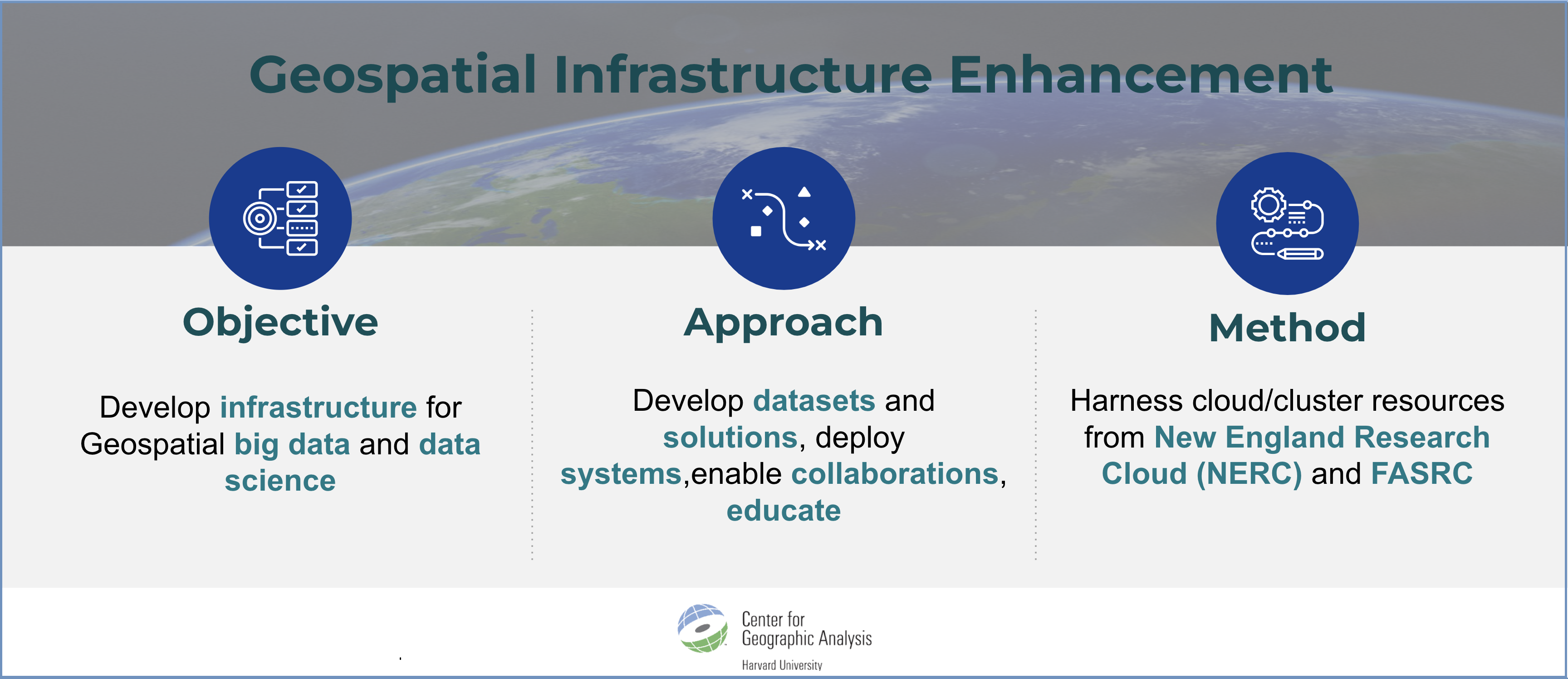

In the rapidly evolving field of geospatial big data, robust infrastructure that can handle large data volumes cost-efficiently and with good performance has become essential. Funded by the Office of the Vice Provost for Research, this project aims to empower researchers by enabling geospatial big data projects across diverse use cases and by providing ongoing support for CGA service projects. Leveraging computing resources at the New England Research Cloud (NERC) and FASRC, we developed a series of products and solutions to make geospatial analytics easier, faster, cheaper, and more effective for Harvard researchers. The project also yields insights into best practices and strategies for developing resilient infrastructure tailored to the demands of geospatial big data and data science.

Integrated multiple sources of big data to produce standardized and open-source datasets:

Fostered collaboration with industry technology providers:

- Heavy.ai, a real-time GPU-based analysis platform, has made its license available for university-wide use until 2025.

- Istari.ai, a comprehensive business intelligence webAI platform, has made its trial license available to CGA, Harvard Business School’s Baker Library and the Department of Government.

Deployed complex data science systems on NERC with free or educational licenses:

- KNIME on NERC (free) provides an end-to-end data science platform for up to 100 concurrent users to conduct workflow-based reproducible and replicable geospatial analytics.

- ArcGIS Enterprise Server on NERC (educational license) provides a robust geospatial platform, serving as a private and secure system exclusively for Harvard researchers.

Developed unique geospatial big data analytics applications:

- RINX 2.0, a containerized climate raster information extraction system for Harvard School of Public Health, which calculated 163 million climate statistics in 1.5 days.

- RapidRoute, an open-source app for shortest travel time calculation for Harvard School of Dental Medicine and Harvard Medical School, which performs routing calculations at a rate of 20,000 points/minute with results close to Google’s.

- Optipath, a cloud-based system for optimal route calculations using ArcGIS Enterprise for Harvard Kennedy School, which processed 20 GB of raster data in 1.5 hours.

- Billion Object Platform (BOP), a Heavy.ai-based real-time spatio-temporal analysis and visualization platform, currently used by the Department of Government to extract Korean and French tweets in order to explain levels of political animosity in these countries.

- A customized BOP dashboard for the Out of Eden Walk project, funded by the National Geographic Society, which offers the public an excellent opportunity to learn about geographic, cultural, and environmental factors along the path of human genetic migration.
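
The performance figures quoted above use different units (statistics per 1.5 days, points per minute, gigabytes per 1.5 hours). As a rough aid for comparing them, the short Python sketch below converts each to a single sustained rate. It uses only the numbers reported in the list; the variable and function names are illustrative, and the results are average rates implied by those totals, not measured benchmarks.

```python
# Convert the reported totals into average sustained rates.
# All input figures come from the list above; names are illustrative.

SECONDS_PER_DAY = 86_400
SECONDS_PER_MINUTE = 60


def average_rate(total: float, duration: float) -> float:
    """Return the average rate: total units divided by elapsed duration."""
    return total / duration


# RINX 2.0: 163 million climate statistics in 1.5 days.
rinx_stats_per_second = average_rate(163_000_000, 1.5 * SECONDS_PER_DAY)

# RapidRoute: 20,000 points per minute.
rapidroute_points_per_second = average_rate(20_000, SECONDS_PER_MINUTE)

# Optipath: 20 GB of raster data in 1.5 hours.
optipath_gb_per_hour = average_rate(20, 1.5)

print(f"RINX 2.0:   {rinx_stats_per_second:,.0f} statistics/second")
print(f"RapidRoute: {rapidroute_points_per_second:,.0f} points/second")
print(f"Optipath:   {optipath_gb_per_hour:.1f} GB/hour")
```

These averages say nothing about peak throughput or about how the systems scale with additional NERC resources.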

Delivered training and publications:

- A workshop on Python for Geospatial Big Data/Data Science was taught in person. A video version is now freely available on YouTube and is included in FASRC’s official training material.

- Published 11 user manuals, 5 media articles, 7 peer-reviewed articles or presentations, 1 webpage, and 12 open-source GitHub repositories.

These products and solutions have garnered excellent feedback for their efficiency and scalability from researchers across Harvard, demonstrating their real-world impact.

As next steps, we plan to build a self-service BOP platform to handle:

- An archival GeoTweets collection of 10 billion tweets for university-wide use.

- Dun & Bradstreet data catering to the specific needs of Harvard Business School.

We also plan to develop a system for effective global routing analysis.